The Definitive Guide

Jacqueline Weber

Extensive review of early reference manualAndrea Hörster

Extensive review of early reference manualKatrina Dlugosch

Draft for section on preprocessing of ESTs in EST manualMIRA Version V5rc1

Copyright © 2018 Bastien Chevreux

This documentation is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA.

Table of Contents

- Preface

- 1. Introduction to MIRA

- 2. Installing MIRA

- 3. Preparing data

- 4. Cookbook: de-novo genome assemblies

- 5. Cookbook: Mapping

- 6. Cookbook: de-novo EST / RNASeq assemblies

- 7. Working with the results of MIRA

- 8. Utilities in the MIRA package

- 9. MIRA 5 reference manual

- 10. Assembly of hard genome or EST / RNASeq projects

- 11. Description of sequencing technologies

- 12. Some advice when going into a sequencing project

- 13. Bits and pieces

- 14. Frequently asked questions

- 15. The MAF format

- 16. Log and temporary files used by MIRA

List of Figures

- 1.1. How MIRA learns from misassemblies (1)

- 1.2. How MIRA learns from misassemblies (2)

- 1.3. How MIRA learns from misassemblies (3)

- 1.4. Slides presenting the repeat locator at the GCB 99

- 1.5. Slides presenting the Edit automatic Sanger editor at the GCB 99

- 1.6. Sanger assembly without EdIt automatic editing routines

- 1.7. Sanger assembly with EdIt automatic editing routines

- 1.8. 454 assembly without 454 automatic editing routines

- 1.9. 454 assembly with 454 automatic editing routines

- 1.10. Coverage of a contig.

- 1.11. Repetitive end of a contig

- 1.12. Non-repetitive end of a contig

- 1.13. MIRA pointing out problems in hybrid assemblies (1)

- 1.14. Coverage equivalent reads (CERs) explained.

- 1.15. Coverage equivalent reads let SNPs become very visible in assembly viewers

- 1.16. SNP tags in a MIRA assembly

- 1.17. Tag pointing out a large deletion in a MIRA mapping assembly

- 7.1. Format conversions with miraconvert

- 7.2. Conversions needed for other tools.

- 7.3. Join at a repetitive site which should not be performed due to missing spanning templates.

- 7.4. Join at a repetitive site which should be performed due to spanning templates being good.

- 7.5. Pseudo-repeat in 454 data due to sequencing artifacts

- 7.6. "SROc" tag showing a SNP position in a Solexa mapping assembly.

- 7.7. "SROc" tag showing a SNP/indel position in a Solexa mapping assembly.

- 7.8. "MCVc" tag (dark red stretch in figure) showing a genome deletion in Solexa mapping assembly.

- 7.9. An IS150 insertion hiding behind a WRMc and a SRMc tags

- 7.10. A 16 base pair deletion leading to a SROc/UNsC xmas-tree

- 7.11. An IS186 insertion leading to a SROc/UNsC xmas-tree

- 11.1. The Solexa GGCxG problem.

- 11.2. The Solexa GGC problem, forward example

- 11.3. The Solexa GGC problem, reverse example

- 11.4.

A genuine place of interest almost masked by the

GGCxGproblem. - 11.5. Example for no GC coverage bias in 2008 Solexa data.

- 11.6. Example for GC coverage bias starting Q3 2009 in Solexa data.

- 11.7. Example for GC coverage bias, direct comparison 2008 / 2010 data.

- 11.8. Example for good IonTorrent data (100bp reads)

- 11.9. Example for problematic IonTorrent data (100bp reads)

- 11.10. Example for a sequencing direction dependent indel

“How much intelligence does one need to sneak upon lettuce? ” | ||

| --Solomon Short | ||

![[Note]](images/note.png) | Note |

|---|---|

A quick note at the start: I am scaling back the scope I recommend MIRA for. For genome de-novo assemblies or mapping projects, haploid organisms up to 20 to 40 megabases should be the limit. Do not use MIRA if you have PacBio or Oxford Nanopore reads. Then again, for polishing those assemblies with Illumina data, MIRA is really good. For mapping projects, do not use MIRA if you expect splicing like, e.g., RNASeq against an eukaryotic genome. Lastly, Illumina projects with more than 40 to 60 million reads start to be so resource intensive that you might be better served with other assemblers or mapping programs. I know some people use MIRA for de-novo RNASeq with 300 million reads because they think it's worth it, but they wait more than a month then for the calculation to finish. |

This "book" is actually the result of an exercise in self-defense. It contains texts from several years of help files, mails, postings, questions, answers etc.pp concerning MIRA and assembly projects one can do with it.

I never really intended to push MIRA. It started out as a PhD thesis and I subsequently continued development when I needed something to be done which other programs couldn't do at the time. But MIRA has always been available as binary on the Internet since 1999 ... and as Open Source since 2007. Somehow, MIRA seems to have caught the attention of more than just a few specialised sequencing labs and over the years I've seen an ever growing number of mails in my inbox and on the MIRA mailing list. Both from people having been "since ever" in the sequencing business as well as from labs or people just getting their feet wet in the area.

The help files -- and through them this book -- sort of reflect this development. Most of the chapters[1] contain both very specialised topics as well as step-by-step walk-throughs intended to help people to get their assembly projects going. Some parts of the documentation are written in a decidedly non-scientific way. Please excuse, time for rewriting mails somewhat lacking, some texts were re-used almost verbatim.

The last few years have seen tremendous change in the sequencing technologies and MIRA 5 reflects that: core data structures and routines had to be thrown overboard and replaced with faster and/or more versatile versions suited for the broad range of technologies and use-cases I am currently running MIRA with.

While other programs are nowadays better suited for many assembly tasks -- think SPADES for larger Illumina data sets or Falcon / Canu for PacBio -- I still regularly come back to MIRA when accuracy is important or catch the above mentioned programs doing something silly.

Nothing is perfect, and both MIRA and this documentation (even if it is rather pompously called Definitive Guide) are far from it. If you spot an error either in MIRA or this manual, feel free to report it. Or, even better, correct it if you can. At least with the manual files it should be easy: they're basically just some decorated text files.

I hope that MIRA will be as useful to you as it has been to me. Have a lot of fun with it.

Berlin, Feb 2019

Bastien Chevreux

[1] Avid readers of David Gerrold will certainly recognise the quotes from his books at the beginning of each chapter

Table of Contents

- 1.1. What is MIRA?

- 1.2. What to read in this manual and where to start reading?

- 1.3. The MIRA quick tour

- 1.4. For which data sets to use MIRA and for which not

- 1.5. Any special features I might be interested in?

- 1.5.1. MIRA learns to discern non-perfect repeats, leading to better assemblies

- 1.5.2. MIRA has integrated editors for data from Sanger, 454, IonTorrent sequencing

- 1.5.3. MIRA lets you see why contigs end where they end

- 1.5.4. MIRA tags problematic decisions in hybrid assemblies

- 1.5.5. MIRA for polishing of PacBio or ONT assemblies

- 1.5.6. MIRA allows older finishing programs to cope with amount data in Illumina mapping projects

- 1.5.7. MIRA tags SNPs and other features, outputs result files for biologists

- 1.5.8. MIRA has ... much more

- 1.6. Versions, Licenses, Disclaimer and Copyright

- 1.7. Getting help / Mailing lists / Reporting bugs

- 1.8. Author

- 1.9. Miscellaneous

“Half of being smart is to know what you're dumb at. ” | ||

| --Solomon Short | ||

MIRA is a multi-pass DNA sequence data assembler/mapper for whole genome and EST/RNASeq projects. MIRA assembles/maps reads gained by

electrophoresis sequencing (aka Sanger sequencing)

Illumina (Solexa) sequencing

Less so: 454 pyro-sequencing (GS20, FLX or Titanium)

Less so: Ion Torrent

into contiguous sequences (called contigs). One can use the sequences of different sequencing technologies either in a single assembly run (a true hybrid assembly) or by mapping one type of data to an assembly of other sequencing type (a semi-hybrid assembly (or mapping)) or by mapping a data against consensus sequences of other assemblies (a simple mapping).

The MIRA acronym stands for Mimicking Intelligent Read Assembly and the program pretty well does what its acronym says (well, most of the time anyway). It is the Swiss army knife of sequence assembly that I've used and developed during the past 20 years to get assembly jobs I work on done efficiently - and especially accurately. That is, without me actually putting too much manual work into it.

Over time, other labs and sequencing providers have found MIRA useful for assembly of extremely 'unfriendly' projects containing lots of repetitive sequences. As always, your mileage may vary.

At the last count, this manual had almost 200 pages and this might seem a little bit daunting. However, you very probably do not need to read everything.

You should read most of this introductional chapter though: e.g.,

the part with the MIRA quick tour

the part which gives a quick overview for which data sets to use MIRA and for which not

the part which showcases different features of MIRA (lots of screen shots!)

where and how to get help if things don't work out as you expected

After that, reading should depend on the type of data you intend to work with: there are specific chapters for assembly of de-novo, of mapping and of EST / RNASeq projects. They all contain an overview on how to define your data and how to launch MIRA for these data sets. There is also chapter on how to prepare data sets from specific sequencing technologies.

The chapter on working with results of MIRA should again be of general interest to everyone. It describes the structure of output directories and files and gives first pointers on what to find where. Also, converting results into different formats -- with and without filtering for specific needs -- is covered there.

As the previously cited chapters are more introductory in their nature, they do not go into the details of MIRA parametrisation. While MIRA has a comprehensive set of standard settings which should be suited for a majority of assembly tasks, the are more than 150 switches / parameters with which one can fine tune almost every aspect of an assembly. A complete description for each and every parameter and how to correctly set parameters for different use cases and sequencing technologies can be found in the reference chapter.

As not every assembly project is simple, there is also a chapter with tips on how to deal with projects which turn out to be "hard." It certainly helps if you at least skim through it even if you do not expect to have problems with your data ... it contains a couple of tricks on what one can see in result files as well as in temporary and log files which are not explained elsewhere.

MIRA comes with a number of additional utilities which are described in an own chapter. While the purpose of miraconvert should be quite clear quite quickly, the versatility of use cases for mirabait might surprise more than one. Be sure to check it out.

As from time to time some general questions on sequencing are popping up on the MIRA talk mailing list, I have added a chapter with some general musings on what to consider when going into sequencing projects. This should be in no way a replacement for an exhaustive talk with a sequencing provider, but it can give a couple of hints on what to take care of.

There is also a FAQ chapter with some of the more frequently asked questions which popped up in the past few years.

Finally, there are also chapters covering some more technical aspects of MIRA: the MAF format and structure / content of the tmp directory have own chapters.

Complete walkthroughs ... are lacking at the moment for MIRA 4. In the MIRA 3 manual I had them, but so many things have changed (at all levels: MIRA, the sequencing technologies, data repositories) that I did not have time to update them. I probably will need quite some time to write new ones. Feel free to send me some if you are inclined to help fellow scientists.

Input can be in various formats like Staden experiment (EXP), Sanger CAF, FASTA, FASTQ or PHD file. Ancillary data containing additional information helpful to the assembly as is contained in, e.g. NCBI traceinfo XML files or Staden EXP files, is also honoured. If present, base qualities in phred style and SCF signal electrophoresis trace files are used to adjudicate between or even correct contradictory stretches of bases in reads by either the integrated automatic EdIt editor (written by Thomas Pfisterer) or the assembler itself.

MIRA was conceived especially with the problem of repeats in genomic data and SNPs in transcript (EST / RNASeq) data in mind. Considerable effort was made to develop a number of strategies -- ranging from standard clone-pair size restrictions to discovery and marking of base positions discriminating the different repeats / SNPs -- to ensure that repetitive elements are correctly resolved and that misassemblies do not occur.

The resulting assembly can be written in different standard formats like CAF, Staden GAP4 directed assembly, ACE, HTML, FASTA, simple text or transposed contig summary (TCS) files. These can easily be imported into numerous finishing tools or further evaluated with simple scripts.

The aim of MIRA is to build the best possible assembly by

having a more or less full overview on the whole project at any time of the assembly, i.e. knowledge of almost all possible read-pairs in a project,

using high confidence regions (HCRs) of several aligned read-pairs to start contig building at a good anchor point of a contig, extending clipped regions of reads on a 'can be justified' basis.

using all available data present at the time of assembly, i.e., instead of relying on sequence and base confidence values only, the assembler will profit from trace files containing electrophoresis signals, tags marking possible special attributes of DNA, information on specific insert sizes of read-pairs etc.

having 'intelligent' contig objects accept or refuse reads based on the rate of unexplainable errors introduced into the consensus

learning from mistakes by discovering and analysing possible repeats differentiated only by single nucleotide polymorphisms. The important bases for discriminating different repetitive elements are tagged and used as new information.

using the possibility given by the integrated automatic editor to correct errors present in contigs (and subsequently) reads by generating and verifying complex error hypotheses through analysis of trace signals in several reads covering the same area of a consensus,

iteratively extending reads (and subsequently) contigs based on

additional information gained by overlapping read pairs in contigs and

corrections made by the automated editor.

MIRA was part of a bigger project that started at the DKFZ (Deutsches Krebsforschungszentrum, German Cancer Research Centre) Heidelberg in 1997: the "Bundesministerium für Bildung, Wissenschaft, Forschung und Technologie" supported the PhD thesis of Thomas and myself by grant number 01 KW 9611. Beside an assembler to tackle difficult repeats, the grant also supported the automated editor / finisher EdIt package -- written by Thomas Pfisterer. The strength of MIRA and EdIt is the automatic interaction of both packages which produces assemblies with less work for human finishers to be done.

I'd like to thank everybody who reported bugs to me, pointed out problems, sent ideas and suggestions they encountered while using the predecessors. Please continue to do so, the feedback made this third version possible.

As a general rule of thumb: if you have an organism with more than 100 to 150 megabases or more than 20 to 40 million reads, you might want to try other assemblers first.

For genome assembly, the version 5 series of MIRA have been reported to work on projects with something like a million Sanger reads (~80 to 100 megabases at 10x coverage), five to ten million 454 Titanium reads (~100 megabases at 20x coverage) and 20 to 40 million Illumina reads (enough for de-novo of a bacterium or a small eukaryote with 76mers to 300mers).

Provided you have the memory, MIRA is expected to work in de-novo mode with

Sanger reads: 5 to 10 million

454 reads: 5 to 15 million

Ion Torrent reads: 5 to 15 million

Illumina reads: in normal operation, up to 40 million reads. Some people use it on up to 300 million, but you'll need a really big machine and month of computation time ... I do not recommend that.

and "normal" coverages, whereas "normal" would be at no more than 50x to 70x for genome projects. Higher coverages will also work, but may create somewhat larger temporary files without heavy parametrisation. Lower coverages (<4x for Sanger, <10x for 454, < 10x for IonTorrent) also need special attention in the parameter settings.

As the complexity of mapping is a lot lower than de-novo, one can basically double (perhaps even triple) the number of reads compared to 'de-novo'. The limiting factor will be the amount of RAM though, and MIRA will also need lots of it if you go into eukaryotes.

The main limiting factor regarding time will be the number of reference sequences (backbones) you are using. MIRA being pedantic during the mapping process, it might be a rather long wait if you have more than 40 megabase of reference sequences.

The default values for MIRA should allow it to work with many EST and RNASeq data sets, sometimes even from non-normalised libraries. For extreme coverage cases however (like, something with a lot of cases at and above 10k coverage), one would perhaps want to resort to data reduction routines before feeding the sequences to MIRA.

On the other hand, recent developments of MIRA were targeted at making de-novo RNASeq assembly of non-normalised libraries liveable, and indeed I now regularly use MIRA for data sets with up to 50 million Illumina 250bp paired-end reads.

Mapping 50 to 60 million sequences against 20k to 30k gene reference sequences is doable, but slow and memory intensive.

![[Note]](images/note.png) | Note |

|---|---|

| For mapping against an eukaryotic genome: MIRA does not splice reads in mapping mode. While it is able to more or less handle insertion / deletions of up to 15% of a read length, splices larger than that will not be made: MIRA will still map the reads, but call a lot of SNPs in the parts which should have been spliced. |

A few perhaps.

![[Note]](images/note.png) | Note |

|---|---|

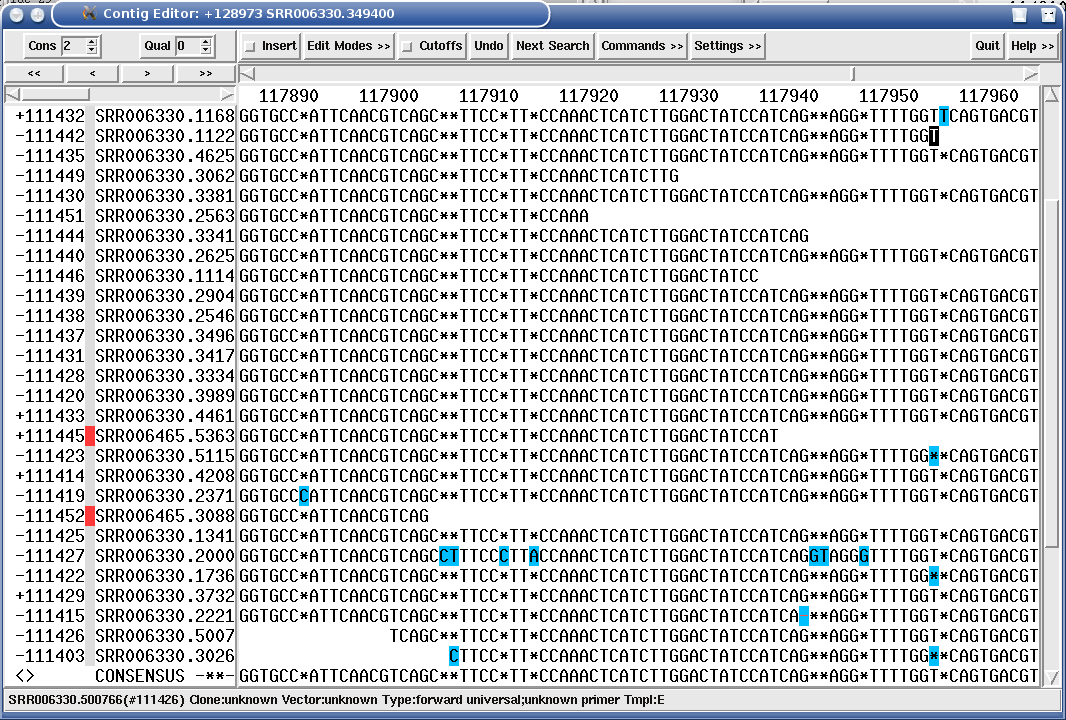

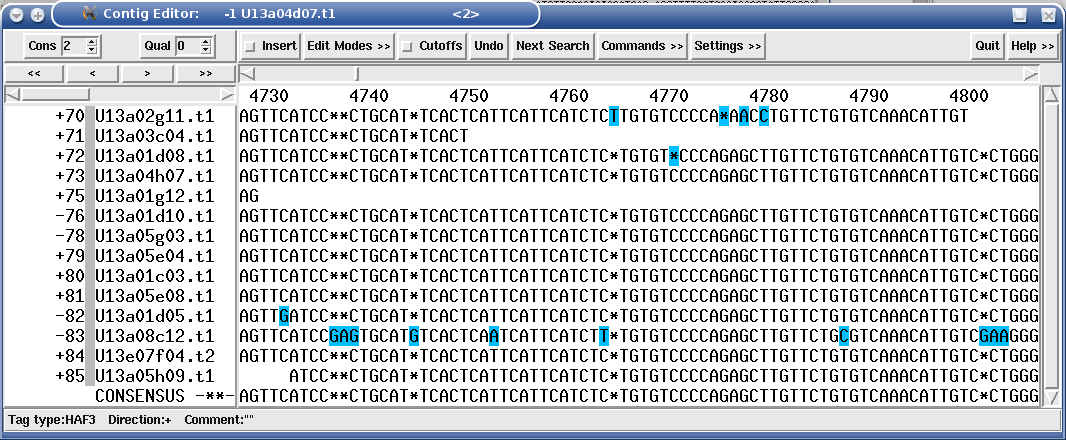

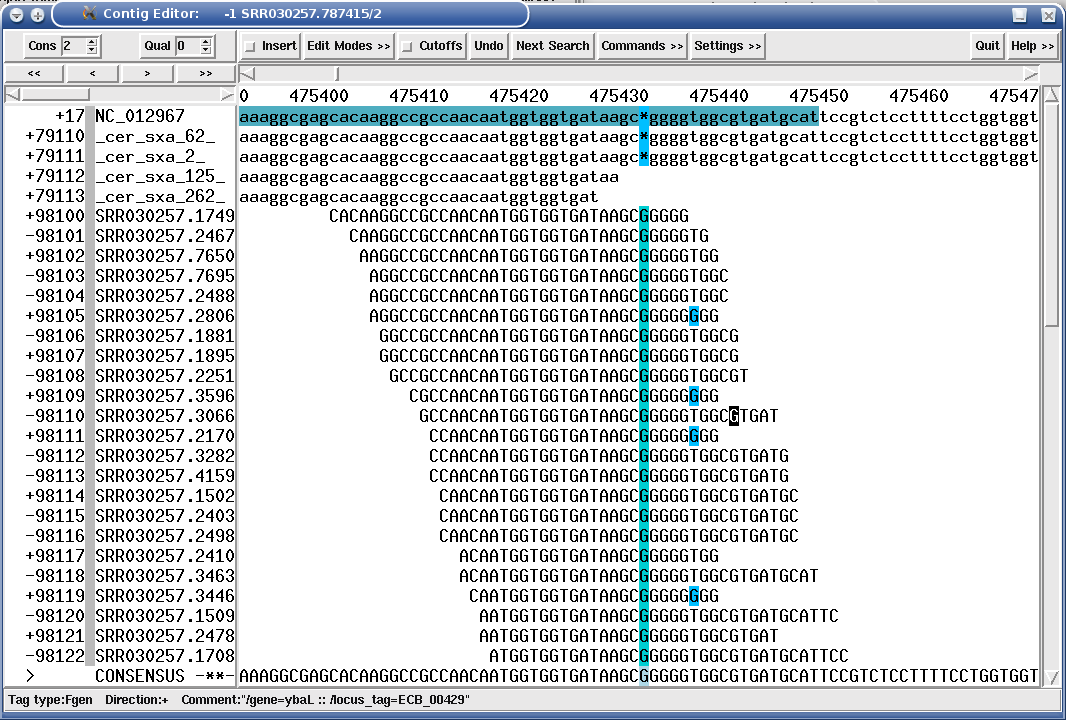

The screen shots in this section show data from assemblies produced with MIRA, but the visualisation itself is done in a finishing program named gap4. Some of the screen shots were edited for showing a special feature of MIRA. E.g., in the screen shots with Illumina data, quite some reads were left out of the view pane as else -- due to the amount of data -- these screen shots would need several pages for a complete printout. |

MIRA is an iterative assembler (it works in several passes) and acts a bit like a child when exploring the world: it explores the assembly space and is specifically parameterised to allow a couple of assembly errors during the first passes. But after each pass some routines (the "parents", if you like) check the result, searching for assembly errors and deduce knowledge about specific assemblies MIRA should not have ventured into. MIRA will then prevent these errors to re-occur in subsequent passes.

As an example, consider the following multiple alignment:

Figure 1.1. How MIRA learns from misassemblies (1). Multiple alignment after 1st pass with an obvious assembly error, notice the clustered columns discrepancies. Two slightly different repeats were assembled together.

|

These kind of errors can be easily spotted by a human, but are hard to prevent by normal alignment algorithms as sometimes there's only one single base column difference between repeats (and not several as in this example).

MIRA spots these things (even if it's only a single column), tags the base positions in the reads with additional information and then will use that information in subsequent passes. The net effect is shown in the next two figures:

Figure 1.2. Multiple alignment after last pass where assembly errors from previous passes have been resolved (1st repeat site)

|

Figure 1.3. Multiple alignment after last pass where assembly errors from previous passes have been resolved (2nd repeat site)

|

The ability of MIRA to learn and discern non-identical repeats from each other through column discrepancies is nothing new. Here's the link to a paper from a talk I had at the German Conference on Bioinformatics in 1999: http://www.bioinfo.de/isb/gcb99/talks/chevreux/

I'm sure you'll recognise the basic principle in figures 8 and 9. The slides from the corresponding talk also look very similar to the screen shots above:

You can get the talk with these slides here: http://chevreux.org/dkfzold/gcb99/bachvortrag_gcb99.ppt

In the first versions in 1999, the EdIt automatic Sanger sequence editor from Thomas Pfisterer was integrated into MIRA.

The routines used a combination of hypothesis generation/testing together with neural networks (trained on ABI and ALF traces) for signal recognition to discern between base calling errors and true multiple alignment differences. They wen back to the trace data to resolve potential conflicts and eventually recall bases using the additional information gained in a multiple alignment of reads.

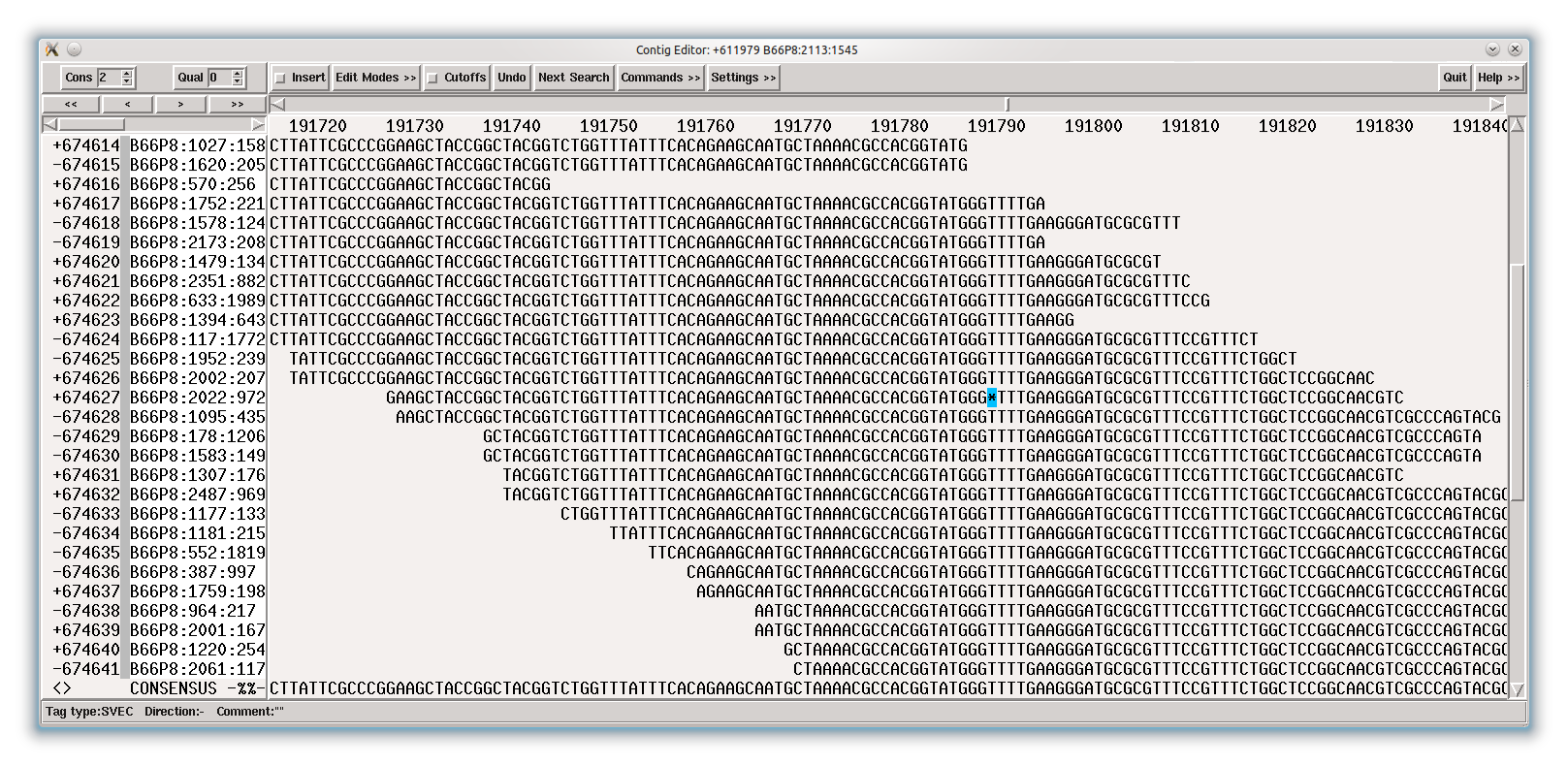

Figure 1.6. Sanger assembly without EdIt automatic editing routines. The bases with blue background are base calling errors.

|

Figure 1.7. Sanger assembly with EdIt automatic editing routines. Bases with pink background are corrections made by EdIt after assessing the underlying trace files (SCF files in this case). Bases with blue background are base calling errors where the evidence in the trace files did not show enough evidence to allow an editing correction.

|

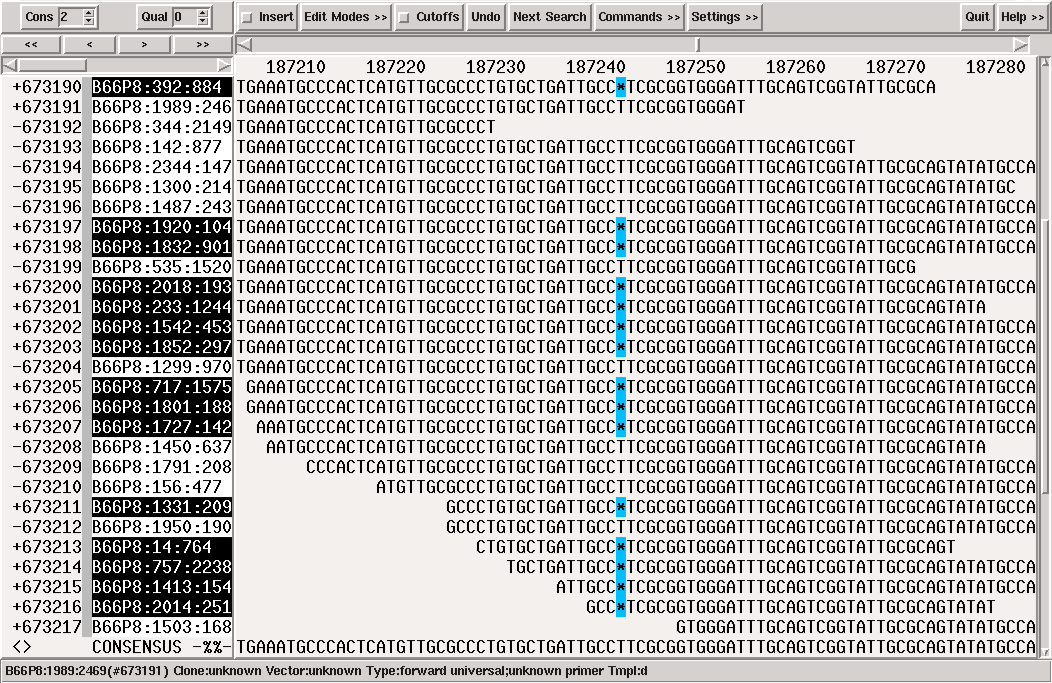

With the introduction of 454 and Illumina reads, MIRA also got in 2007 specialised editors to search and correct for typical 454 and Illumina sequencing problems, like the homopolymer run over-/undercalls or the Illumina GGCxG motif problem. These editors are now integrated into MIRA itself and are not part of EdIt anymore.

While not being paramount to the assembly quality, both editors provide additional layers of safety for the MIRA learning algorithm to discern non-perfect repeats even on a single base discrepancy. Furthermore, the multiple alignments generated by these two editors are way more pleasant to look at (or automatically analyse) than the ones containing all kind of gaps, insertions, deletions etc.pp.

A very useful feature for finishing are kmer (hash) frequency tags which MIRA sets in the assembly. Provided your finishing editor understands those tags (gap4, gap5 and consed are fine but there may be others), they'll give you precious insight where you might want to be cautious when joining to contigs or where you would need to perform some primer walking. MIRA colourises the assembly with the hash frequency (HAF) tags to show repetitiveness.

You will need to read about the HAF tags in the reference manual, but in a nutshell: the HAF5, HAF6 and HAF7 tags tell you potentially have repetitive to very repetitive read areas in the genome, while HAF2 tags will tell you that these areas in the genome have not been covered as well as they should have been.

As an example, the following figure shows the coverage of a contig.

The question is now: why did MIRA stop building this contig on the left end (left oval) and why on the right end (right oval).

Looking at the HAF tags in the contig, the answer becomes quickly clear: the left contig end has HAF5 tags in the reads (shown in bright red in the following figure). This tells you that MIRA stopped because it probably could not unambiguously continue building this contig. Indeed, if you BLAST the sequence at the NCBI, you will find out that this is an rRNA area of a bacterium, of which bacteria normally have several copies in the genome:

Figure 1.11. HAF5 tags (reads shown with red background) covering a contig end show repetitiveness as reason for stopping a contig build.

|

The right end of the same contig however ends in HAF3 tags (normal coverage, bright green in the next figure) and even HAF2 tags (below average coverage, pale green in the next image). This tells you MIRA stopped building the contig at this place simply because there were no more reads to continue. This is a perfect target for primer walking if you want to finish a genome.

Figure 1.12. HAF2 tags covering a contig end show that no more reads were available for assembly at this position.

|

Many people combine Sanger & 454 or 454 & Illumina to improve the sequencing quality of their project through two (or more) sequencing technologies. To reduce time spent in finishing, MIRA automatically tags those bases in a consensus of a hybrid assembly where reads from different sequencing technologies severely contradict each other.

The following example shows a hybrid 454 / Illumina assembly where reads from 454 (highlighted read names in following figure) were not sure whether to have one or two "G" at a certain position. The consensus algorithm would have chosen "two Gs" for 454, obviously a wrong decision as all Illumina reads at the same spot (the reads which are not highlighted) show only one "G" for the given position. While MIRA chose to believe Illumina in this case, it tagged the position anyway in case someone chooses to check these kind of things.

Figure 1.13. A "STMS" tag (Sequencing Technology Mismatch Solved, the black square base in the consensus) showing a potentially difficult decision in a hybrid 454 / Illumina de-novo assembly.

|

While MIRA is not really suited to assemble PacBio or Oxford Nanopore reads de-novo, it is very well suited to polish the results of such assemblies with Illumina reads in mapping projects.

TODO: example

Quality control is paramount when you do mutation analysis for biologists: I know they'll be on my doorstep the very next minute they found out one of the SNPs in the resequencing data wasn't a SNP, but a sequencing artefact. And I can understand them: why should they invest -- per SNP -- hours in the wet lab if I can invest a couple of minutes to get them data false negative rates (and false discovery rates) way below 1%? So, finishing and quality control for any mapping project is a must.

Both gap4 and consed start to have a couple of problems when projects have millions of reads: you need lots of RAM and scrolling around the assembly gets a test to your patience. Still, these two assembly finishing programs are amongst the better ones out there, although gap5 starts to quickly arrive in a state in which it allows itself to substitute to gap4.

So, MIRA reduces the number of reads in Illumina mapping projects without sacrificing information on coverage. The principle is pretty simple: for 100% matching reads, MIRA tracks coverage of every reference base and creates long synthetic, coverage equivalent reads (CERs) in exchange for the Illumina reads. Reads that do not match 100% are kept as own entities, so that no information gets lost. The following figure illustrates this:

Figure 1.14. Coverage equivalent reads (CERs) explained.

Left side of the figure: a conventional mapping with eleven reads of size 4 against a consensus (in uppercase). The inversed base in the lowest read depicts a sequencing error.

Right side of the figure: the same situation, but with coverage equivalent reads (CERs). Note that there are less reads, but no information is lost: the coverage of each reference base is equivalent to the left side of the figure and reads with differences to the reference are still present.

|

This strategy is very effective in reducing the size of a project. As an example, in a mapping project with 9 million Illumina 36mers, MIRA created a project with 1.7m reads: 700k CER reads representing ~8 million 100% matching Illumina reads, and it kept ~950k mapped reads as they had ≥ mismatch (be it sequencing error or true SNP) to the reference. A reduction of 80%, and numbers for mapping projects with Illumina 100bp reads are in a similar range.

Also, mutations of the resequenced strain now really stand out in the assembly viewer as the following figure shows:

Want to assemble two or several very closely related genomes without reference, but finding SNPs or differences between them?

Tired of looking at some text output from mapping programs and guessing whether a SNP is really a SNP or just some random junk?

MIRA tags all SNPs (and other features like missing coverage etc.) it finds so that -- when using a finishing viewer like gap4 or consed -- one can quickly jump from tag to tag and perform quality control. This works both in de-novo assembly and in mapping assembly, all MIRA needs is the information which read comes from which strain.

The following figure shows a mapping assembly of Illumina 36mers against a bacterial reference sequence, where a mutant has an indel position in an gene:

Figure 1.16. "SROc" tag (Snp inteR Organism on Consensus) showing a SNP position in a Illumina mapping assembly.

|

Other interesting places like deletions of whole genome parts are also directly tagged by MIRA and noted in diverse result files (and searchable in assembly viewers):

Figure 1.17. "MCVc" tag (Missing CoVerage in Consensus, dark red stretch in figure) showing a genome deletion in Illumina mapping assembly.

|

![[Note]](images/note.png) | Note |

|---|---|

| For bacteria -- and if you use annotated GenBank files as reference sequence -- MIRA will also output some nice lists directly usable (in Excel) by biologists, telling them which gene was affected by what kind of SNP, whether it changes the protein, the original and the mutated protein sequence etc.pp. |

Extensive possibilities to clip data if needed: by quality, by masked bases, by A/T stretches, by evidence from other reads, ...

Routines to re-extend reads into clipped parts if multiple alignment allows for it.

Read in ancillary data in different formats: EXP, NCBI TRACEINFO XML, SSAHA2, SMALT result files and text files.

Detection of chimeric reads.

Support for many different of input and output formats (FASTA, EXP, FASTQ, CAF, MAF, ...)

Automatic memory management (when RAM is tight)

Over 150 parameters to tune the assembly for a lot of use cases, many of these parameters being tunable individually depending on sequencing technology they apply to.

There are two kind of versions for MIRA that can be compiled form source files: production and development.

Production versions are from the stable branch of the source code. These versions are available for download from SourceForge.

Development versions are from the development branch of the source tree. These are also made available to the public and should be compiled by users who want to test out new functionality or to track down bugs or errors that might arise at a given location. Release candidates (rc) also fall into the development versions: they are usually the last versions of a given development branch before being folded back into the production branch.

MIRA has been put under the GPL version 2.

This program is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 2 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with this program; if not, write to the Free Software Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA

You may also visit http://www.opensource.org/licenses/gpl-2.0.php at the Open Source Initiative for a copy of this licence.

The documentation pertaining to MIRA is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA.

© 1997-2000 Deutsches Krebsforschungszentrum Heidelberg -- Dept. of Molecular Biophysics and Bastien Chevreux (for MIRA) and Thomas Pfisterer (for EdIt)

© 2001 (and later) Bastien Chevreux.

All rights reserved.

MIRA uses the excellent Expat library to parse XML files. Expat is Copyright © 1998, 1999, 2000 Thai Open Source Software Center Ltd and Clark Cooper as well as Copyright © 2001, 2002 Expat maintainers.

See http://www.libexpat.org/ and http://sourceforge.net/projects/expat/ for more information on Expat.

Please try to find an answer to your question by first reading the documents provided with the MIRA package (FAQs, READMEs, usage guide, guides for specific sequencing technologies etc.). It's a lot, but then again, they hopefully should cover 90% of all questions.

If you have a tough nut to crack or simply could not find what you were searching for, you can subscribe to the MIRA talk mailing list and send in your question (or comment, or suggestion), see http://www.chevreux.org/mira_mailinglists.html for more information on that. Now that the number of subscribers has reached a good level, there's a fair chance that someone could answer your question before I have the opportunity or while I'm away from mail for a certain time.

![[Note]](images/note.png) | Note |

|---|---|

Please very seriously consider using the mailing list before mailing me directly. Every question which can be answered by participants of the list is time I can invest in development and documentation of MIRA. I have a day job as bioinformatician which has nothing to do with MIRA and after work hours are rare enough nowadays. Furthermore, Google indexes the mailing list and every discussion / question asked on the mailing list helps future users as they show up in Google searches. Only mail me directly (bach@chevreux.org) if you feel that there's some information you absolutely do not want to share publicly. |

![[Note]](images/note.png) | Note |

|---|---|

| Subscribing to the list before sending mails to it is necessary as messages from non-subscribers will be stopped by the system to keep the spam level low. |

To report bugs or ask for new features, please use the GitHub issue tool at: http://github.com/bachev/mira/issues. This ensures that requests do not get lost and you get the additional benefit to automatically know when a bug has been fixed as I will not send separate emails, that's what bug trackers are there for.

Finally, new or intermediate versions of MIRA will be announced on the separate MIRA announce mailing list. Traffic is very low there as the only one who can post there is me. Subscribe if you want to be informed automatically on new releases of MIRA.

Bastien Chevreux (mira): <bach@chevreux.org>

MIRA can use automatic editing routines for Sanger sequences which were

written by Thomas Pfisterer (EdIt):

<t.pfisterer@dkfz-heidelberg.de>

Please use these citations:

- For mira

Chevreux, B., Wetter, T. and Suhai, S. (1999): Genome Sequence Assembly Using Trace Signals and Additional Sequence Information. Computer Science and Biology: Proceedings of the German Conference on Bioinformatics (GCB) 99, pp. 45-56.

Chevreux, B., Pfisterer, T., Drescher, B., Driesel, A. J., Müller, W. E., Wetter, T. and Suhai, S. (2004): Using the miraEST Assembler for Reliable and Automated mRNA Transcript Assembly and SNP Detection in Sequenced ESTs. Genome Research, 14(6)

Table of Contents

- 2.1. Where to fetch MIRA

- 2.2. Installing from a precompiled binary package

- 2.3. Integration with third party programs (gap4, consed)

- 2.4. Compiling MIRA yourself

- 2.5. Installation walkthroughs

- 2.6. Compilation hints for other platforms.

- 2.7. Notes for distribution maintainers / system administrators

“A problem can be found to almost every solution. ” | ||

| --Solomon Short | ||

Source packages are usually named

mira-

miraversion.tar.bz2

![[Note]](images/note.png) | Note |

|---|---|

For

The version string sometimes can have a different format:

|

Precompiled binary packages are named in the following way:

mira_

miraversion_OS-and-binarytype.tar.bz2

![[Note]](images/note.png) | Note |

|---|---|

The

|

SourceForge: https://sourceforge.net/projects/mira-assembler/files/MIRA/

There you will normally find two directories (stable and development), both with a couple of precompiled binaries -- usually for Linux and Mac OSX -- or the source package for compiling yourself.

Examples for packages at SourceForge:

mira_4.9.8_linux-gnu_x86_64_static.tar.bz2mira_4.9.8_OSX_snowleopard_x86_64_static.tar.bz2mira-4.9.8.tar.bz2

GitHub main page: https://github.com/bachev/mira

GitHub releases (go here for binary packages): https://github.com/bachev/mira/releases

GitHub contains the live source of MIRA as well as source packages and binary packages for selected versions. Two main branches are generally interesting to the public: "master" and "development".

To get a clone of the repository on on GitHub, do:

git clone https://github.com/bachev/mira.git

cd mira

./bootstrap.shOnce bootstrap has run through (see below for necessary prerequisites), the system is ready for a ./configure call.

The distributable package follows the one-directory-which-contains-everything-which-is-needed philosophy, but after unpacking and moving the package to its final destination, you need to run a script which will create some data files.

Download the package, unpack it.

Move the directory somewhere to your disk. Either to one of the "standard" places like, e.g.,

/opt/mira,/usr/local/miraor somewhere in your home directorySoftlink the binaries which are in the 'bin' directory into a directory which is in your shell PATH. Then have the shell reload the location of PATH binaries (either

hash -rfor sh/bash orrehashfor csh/tcsh.Alternatively, add the

bindirectory of the MIRA package to your PATH variable.Test whether the binaries are installed ok via

mirabait -vwhich should return with the current version you downloaded and installed.Now you need to run a script which will unpack and reformat some data needed by MIRA. That script is located in the

dbdatadirectory of the package and should be called with the name of the SLS file present in the same diretory like this:arcadia:/path/to/mirapkg$cd dbdataarcadia:/path/to/mirapkg/dbdata$ls -ldrwxr-xr-x 3 bach bach 4096 2016-03-18 14:31 mira-createsls -rwxr-xr-x 1 bach bach 2547 2015-12-14 04:33 mira-install-sls-rrna.sh -rw-r--r-- 1 bach bach 337 2016-01-01 14:50 README.txt lrwxrwxrwx 1 bach bach 10421035 2016-03-18 14:28 rfam_rrna-21-12.sls.gzarcadia:/path/to/mirapkg/dbdata$./mira-install-sls-rrna.sh rfam_rrna-21-12.sls.gzThis will take a minute or so. Then you're done for MIRA.

Additional scripts for special purposes are in the

scripts directory. You might or might not want to

have them in your $PATH.

Scripts and programs for MIRA from other authors are in the

3rdparty directory. Here too, you may or may not

want to have (some of them) in your $PATH.

MIRA sets tags in the assemblies that can be read and interpreted by the Staden gap4 package or consed. These tags are extremely useful to efficiently find places of interest in an assembly (be it de-novo or mapping), but both gap4 and consed need to be told about these tags.

Data files for a correct integration are delivered in the

support directory of the distribution. Please

consult the README in that directory for more information on how to

integrate this information in either of these packages.

![[Note]](images/note.png) | Note |

|---|---|

| As a general rule of thumb, you do NOT want to compile MIRA yourself. Fetch a precompiled binary if possible. |

Compiling the 5.x series of MIRA needs a C++14 compatible tool chain, i.e., systems starting from 2013/2014 should be OK. The requisites for compiling MIRA are:

gcc ≥ 6.1, with libstdc++6. Or clang ≥ 3.5.

You really want to use a simple installation package pre-configured for your system, but in case you want or have to install gcc or clang yourself, you will have a lot of fun.

BOOST library ≥ 1.48 (≥ 1.61 on OSX).

You really want to use a simple installation package pre-configured for your system, but in case you want or have to install BOOST yourself, please refer to http://www.boost.org/ for more information on the BOOST library.

![[Warning]](images/warning.png)

Warning Do NOT use a so called staged BOOST library, that will not work. - zlib. Should your system not have zlib installed or available as simple installation package, please see http://www.zlib.net/ for more information regarding zlib.

- GNU make. Should your system not have gmake installed or available as simple installation package, please see www.gnu.org/software/make/ for more information regarding GNU make.

- GNU flex ≥ 2.6.0. Should your system not have flex installed or available as simple installation package, please see http://flex.sourceforge.net/ for more information regarding flex.

- Expat library ≥ 2.0.1. Should your system not have the Expat library and header files already installed or available as simple installation package, you will need to download and install a yourself. Please see http://www.libexpat.org/ and http://sourceforge.net/projects/expat/ for information on how to do this.

- xxd. A small utility from the vim package.

For building the documentation, additional prerequisites are:

- help2man for manual pages

- xsltproc + docbook-xsl for HTML output

- a LaTEX system and dblatex for PDF output

When building from a GitHub checkout, additional prerequisites are a couple of additional tools:

- current automake and autoconf

- current libtool

If you're lucky, after fetching the source (and eventually calling ./bootstrap.sh if you worked from a GitHub checkout), all you need to do is basically:

arcadia:/path/to/mira-5.0.0$./configurearcadia:/path/to/mira-5.0.0$makearcadia:/path/to/mira-5.0.0$make install

This should install the following programs:

- mira

- miraconvert

- mirabait

- miramer

- miramem

Should the ./configure step fail for some reason or

another, you should get a message telling you at which step this

happens and and either install missing packages or tell

configure where it should search the packages it

did not find. See also next section or the system specific

walkthroughs in this chapter.

MIRA understands all standard autoconf configure arguments, the most

important one probably being --prefix= to tell

where you want to have MIRA installed. Please consult

./configure --help to get a full list of currently

supported arguments.

BOOST is maybe the most tricky library to get right in case it does

not come pre-configured for your system. The two main arguments for

helping to locate BOOST are

probably --with-boost=[ARG]

and --with-boost-libdir=LIB_DIR. Only if those

two fail, try using the other --with-boost-*= arguments

you will see from the ./configure help text.

The configure scripts honours the following MIRA specific arguments:

- --with-compiler=gcc/clang

-

Force the compilation to occur with the given compiler. The

default is clang on OSX systems and gcc on all other.

![[Note]](images/note.png)

Note Using homebrew installed GCC on OSX compiled, but the executable did not run (no idea why). Using clang on Linux is untested atm. - --with-brew

- Automatically configure the build to be made entirely with and from software (compiler, libraries etc.) installed via Homebrew. Works perfectly on OSX, untested on Linux.

- --enable-warnings

- Enables compiler warnings, useful only for developers, not for users.

- --enable-debug

- Lets the MIRA binary contain C/C++ debug symbols.

- --enable-mirastatic

- Builds static binaries which are easier to distribute. Some platforms (like OpenSolaris) might not like this and you will get an error from the linker.

- --enable-optimisations

- Instructs the configure script to set optimisation arguments for compiling (on by default). Switching optimisations off (warning, high impact on run-time) might be interesting only for, e.g, debugging with valgrind.

- --enable-publicquietmira

- Some parts of MIRA can dump additional debug information during assembly, setting this switch to "no" performs this. Warning: MIRA will be a bit chatty, using this is not recommended for public usage.

- --enable-developmentversion

- Using MIRA with enabled development mode may lead to extra output on stdout as well as some additional data in the results which should not appear in real world data

- --enable-boundtracking

- --enable-bugtracking

- Both flags above compile in some basic checks into mira that look for sanity within some functions: Leaving this on "yes" (default) is encouraged, impact on run time is minimal

You will need to install a couple of tools and libraries before compiling MIRA. Here's the recipe:

sudo apt-get install gcc make flex

sudo apt-get install libexpat1-dev libboost-all-devOnce this is done, you can unpack and compile MIRA. For a dynamically linked version, use:

tar xvjf mira-5.0.0.tar.bz2

cd mira-5.0.0

./configure

make && make installFor a statically linked version, just change the configure line from above into:

./configure --enable-mirastaticIn case you also want to build documentation yourself, you will need to install this in addition:

sudo apt-get install xsltproc docbook-xsl dblatexAnd building the documentation in PDF and HTML format is done like this:

make docs![[Note]](images/note.png) | Note |

|---|---|

People working on git checkouts of the MIRA source code will obviously need some more tools. Get them with this: |

![[Note]](images/note.png) | Note |

|---|---|

| This guide for OpenSUSE is outdated, but may give you clues on how to perform this on current systems. |

You will need to install a couple of tools and libraries before compiling MIRA. Here's the recipe:

sudo zypper install gcc-c++ boost-devel

sudo zypper install flex libexpat-devel zlib-develOnce this is done, you can unpack and compile MIRA. For a dynamically linked version, use:

tar xvjf mira-5.0.0.tar.bz2

cd mira-5.0.0

./configure

make && make installIn case you also want to build documentation yourself, you will need this in addition:

sudo zypper install docbook-xsl-stylesheets dblatex![[Note]](images/note.png) | Note |

|---|---|

People working on git checkouts of the MIRA source code will obviously need some more tools. Get them with this: |

![[Note]](images/note.png) | Note |

|---|---|

| This guide for Fedora is outdated, but may give you clues on how to perform this on current systems. |

You will need to install a couple of tools and libraries before compiling MIRA. Here's the recipe:

sudo yum -y install gcc-c++ boost-devel

sudo yum install flex expat-devel vim-common zlib-develOnce this is done, you can unpack and compile MIRA. For a dynamically linked version, use:

tar xvjf mira-5.0.0.tar.bz2

cd mira-5.0.0

./configure

make && make installIn case you also want to build documentation yourself, you will need this in addition:

sudo yum -y install docbook-xsl dblatex![[Note]](images/note.png) | Note |

|---|---|

People working on git checkouts of the MIRA source code will obviously need some more tools. Get them with this: |

These instructions are for OSX 10.14 (Mojave) and use Homebrew. There are other ways to do this (e.g., see the "compile everything from scratch"), but they are definetly more painful.

First, you will need to install the Apple XCode command line tools. This may be as easy as simply open a terminal and typing:

xcode-select --installHowever, on the machine I have, I got weird compilation errors and found https://github.com/frida/frida/issues/338#issuecomment-426777849 which told me to do this for things to work (don't ask me why, but this resolved my problems):

cd /Library/Developer/CommandLineTools/Packages/

open macOS_SDK_headers_for_macOS_10.14.pkgThen, if you do not already have it, install Homebrew. See https://brew.sh/.

Then go on and install with homebrew the LLVM clang compiler suite and a couple of other things needed:

brew install llvm boost flex expat

To compile from a git checkout, you will also need these:

brew install autoconf automake libtool git

To build the HTML documentation, this:

brew install docbook-xsl

And to build the PDF documentation, we need MacTex (huge download, around 1 GiB, 6 GiB on disk) and the dblatex package:

brew cask install mactexpip install dblatex

Finally, if you want to build small, rudimentary man pages:

brew install help2manNow unpack MIRA, configure it and compile:

tar xvf mira-5.0.0.tar.bz2cd mira-5.0.0./configure --with-brew --enable-debugmake -j 2

That's it for the dynamic version.

For building an almost static version, we need some trickery:

configure with the --enable-mirastatic argument),

then ask the build system to create a distribution. This will launch a

toolchain at the end of which you will get a bundle which contains

everything needed to run, both on OSX and other Unix systems.

./configure --with-brew --enable-mirastatic --enable-debugmakemake distrib

This lets you build a self-contained static MIRA binary. The only prerequisite here is that you have a working gcc with the minimum version described above. Please download all necessary files (expat, flex, etc.pp) and then simply follow the script below. The only things that you will want to change are the path used and, maybe, the name of some packages in case they were bumped up a version or revision.

Contributed by Sven Klages.

## whatever path is appropriatecd## expat/home/gls/SvenTemp/installtar zxvf## flexexpat-2.0.1.tar.gzcdexpat-2.0.1./configure--prefix=/home/gls/SvenTemp/expatmake && make installcd## boost/home/gls/SvenTemp/installtar zxvfflex-2.5.35.tar.gzcdflex-2.5.35./configure--prefix=/home/gls/SvenTemp/flexmake && make install cd/home/gls/SvenTemp/flex/binln -s flex flex++ export PATH=/home/gls/SvenTemp/flex/bin:$PATHcd## mira itself/home/gls/SvenTemp/installtar zxvfboost_1_48_0.tar.gzcdboost_1_48_0./bootstrap.sh --prefix=/home/gls/SvenTemp/boost./b2 installexport CXXFLAGS="-I/home/gls/SvenTemp/flex/include" cd/home/gls/SvenTemp/installtar zxvfmira-3.4.0.1.tar.gzcdmira-3.4.0.1./configure --prefix=/home/gls/SvenTemp/mira\ --with-boost=/home/gls/SvenTemp/boost\ --with-expat=/home/gls/SvenTemp/expat\ --enable-mirastatic make && make install

In case you do not want a static binary of MIRA, but a dynamically linked version, the following script by Robert Bruccoleri will give you an idea on how to do this.

Note that he, having root rights, puts all additional software in /usr/local, and in particular, he keeps updated versions of Boost and Flex there.

#!/bin/sh -x

make distclean

oze=`find . -name "*.o" -print`

if [[ -n "$oze" ]]

then

echo "Not clean."

exit 1

fi

export prefix=${BUILD_PREFIX:-/usr/local}

export LDFLAGS="-Wl,-rpath,$prefix/lib"

./configure --prefix=$prefix \

--enable-debug=yes \

--enable-mirastatic=no \

--with-boost-libdir=$prefix/lib \

--enable-optimisations \

--enable-boundtracking=yes \

--enable-bugtracking=yes \

--enable-extendedbugtracking=no

make

make installContributed by Thomas Vaughan

The system flex (/usr/bin/flex) is too old, but the devel/flex package from a recent pkgsrc works fine. BSD make doesn't like one of the lines in src/progs/Makefile, so use GNU make instead (available from pkgsrc as devel/gmake). Other relevant pkgsrc packages: devel/boost-libs, devel/boost-headers and textproc/expat. The configure script has to be told about these pkgsrc prerequisites (they are usually rooted at /usr/pkg but other locations are possible):

FLEX=/usr/pkg/bin/flex ./configure --with-expat=/usr/pkg --with-boost=/usr/pkgIf attempting to build a pkgsrc package of MIRA, note that the LDFLAGS passed by the pkgsrc mk files don't remove the need for the --with-boost option. The configure script complains about flex being too old, but this is harmless because it honours the $FLEX variable when writing out makefiles.

Depending on options/paramaters, the MIRA/mirabait binary may need

to load some additional data during the run. By default this data will

always be searched at this location:

LOCATION_OF_BINARY/../share/mira/...

That is: If the binary is, e.g.,

/opt/mira5/bin/mira with a softlink pointing from

/usr/local/bin/mira -> /opt/mira5/bin/mira

(because, e.g., /usr/local/bin may be by default in your

PATH variable), then the additional data will be searched in

/opt/mira5/share/mira/... and NOT in

/usr/local/share/mira/....

![[Note]](images/note.png) | Note |

|---|---|

| In short: since MIRA 4.9.6, moving the binary is not enough anymore. Take care to have the share directory in the right place, i.e., adjacent to the directory the MIRA binary lives in. |

Table of Contents

“Rome didn't fall in a day either.” | ||

| --Solomon Short | ||

Outside MIRA: transform .ab1 to .scf, perform sequencing vector clip (and cloning vector clip if used), basic quality clips.

Recommended program: gap4 (or rather pregap4) from the Staden 4 package.

Outside MIRA: convert SFF instrument from Roche to FASTQ, use sff_extract for that. In case you used "non-standard" sequencing procedures: clip away MIDs, clip away non-standard sequencing adaptors used in that project.

Outside MIRA: for heavens' sake: do NOT try to clip or trim by quality yourself. Do NOT try to remove standard sequencing adaptors yourself. Just leave Illumina data alone! (really, I mean it).

MIRA is much, much better at that job than you will probably ever be ... and I dare to say that MIRA is better at that job than 99% of all clipping/trimming software existing out there. Just make sure you use the [-CL:pec] (proposed_end_clip) option of MIRA.

![[Note]](images/note.png) | Note |

|---|---|

| The only exception to the above is if you (or your sequencing provider) used decidedly non-standard sequencing adaptors. Then it might be worthwhile to perform own adaptor clipping. But this will not be the case for 99% of all sequencing projects out there. |

Joining paired-ends: if you want to do this, feel free to use any tool which is out there (TODO: quick list). Just make sure they do not join on very short overlaps. For me, the minimum overlap is at least 17 bases, but I more commonly use at least 30.

Outside MIRA: need to convert BAM to FASTQ. Need to clip away non-standard sequencing adaptors if used in that project. Apart from that: leave the data alone.

Outside MIRA: you need to convert SRA format to FASTQ format. This is done using fastq-dump from the SRA toolkit from the NCBI. Make sure to have at least version 2.4.x of the toolkit. Last time I looked (March 2015), the software was at http://www.ncbi.nlm.nih.gov/Traces/sra/?view=software, the documentation for the whole toolkit was at http://www.ncbi.nlm.nih.gov/Traces/sra/?view=toolkit_doc, and for fastq-dump it was http://www.ncbi.nlm.nih.gov/Traces/sra/?view=toolkit_doc&f=fastq-dump

After extraction, proceed with preprocessing as described above, depending on the sequencing technology used.

For extracting Illumina data, use something like this:

arcadia:/some/path$fastq-dump -I --split-filessomefile.sra

![[Note]](images/note.png) | Note |

|---|---|

As fastq-dump unfortunately uses a pretty wasteful variant of the FASTQ format, you might want to reduce the file size for each FASTQ it produces by doing this: The above command performs an in-file replacement of unnecessary name and comments on the quality divider lines of the FASTQ. The exact translation of the sed is: do an in-file replacement (-i); starting on the third line, then every fourth line (3~4); substitute (s/); a line which starts (^); with a plus (+); and then can have any character (.); repeated any number of times including zero (*); until the end of the line ($); by just a single plus character (/+/). This alone reduces the file size of a typical Illumina data set with 100mers extracted from the SRA by about 15 to 20%. |

“The universe is full of surprises - most of them nasty.” | ||

| --Solomon Short | ||

This guide assumes that you have basic working knowledge of Unix systems, know the basic principles of sequencing (and sequence assembly) and what assemblers do.

While there are step by step instructions on how to setup your data and then perform an assembly, this guide expects you to read at some point in time

Before the assembly, Chapter 3: “Preparing data” to know what to do (or not to do) with the sequencing data before giving it to MIRA.

After the assembly, Chapter 7: “Working with the results of MIRA” to know what to do with the results of the assembly. More specifically, Section 7.1: “ MIRA output directories and files ”, Section 7.2: “ First look: the assembly info ”, Section 7.3: “ Converting results ”, Section 7.4: “ Filtering results ” and Section 7.5: “ Places of importance in a de-novo assembly ”.

And also Chapter 9: “MIRA 5 reference manual” to look up how manifest files should be written (Section 9.3.2: “ The manifest file: basics ” and Section 9.3.3: “ The manifest file: information on the data you have ” and Section 9.3.4: “ The manifest file: extended parameters ”), some command line options as well as general information on what tags MIRA uses in assemblies, files it generates etc.pp

Last but not least, you may be interested in some observations about the different sequencing technologies and the traps they may contain, see Chapter 11: “Description of sequencing technologies” for that. For advice on what to pay attention to before going into a sequencing project, have a look at Chapter 12: “Some advice when going into a sequencing project”.

This part will introduce you step by step how to get your data together for a simple de-novo assembly. I'll make up an example using an imaginary bacterium: Bacillus chocorafoliensis (or short: Bchoc). You collected the strain you want to assemble somewhere in the wild, so you gave the strain the name Bchoc_wt.

Just for laughs, let's assume you sequenced that bug with lots of more or less current sequencing technologies: Sanger, 454, Illumina, Ion Torrent and Pacific Biosciences.

You need to create (or get from your sequencing provider) the sequencing data in any supported file format. Amongst these, FASTQ and FASTA + FASTA-quality will be the most common, although the latter is well on the way out nowadays. The following walkthrough uses what most people nowadays get: FASTQ.

Create a new project directory (e.g. myProject)

and a subdirectory of this which will hold the sequencing data

(e.g. data).

arcadia:/path/tomkdir myProjectarcadia:/path/tocd myProjectarcadia:/path/to/myProject$mkdir data

Put the FASTQ data into that data directory so

that it now looks perhaps like this:

arcadia:/path/to/myProject$ls -l data-rw-r--r-- 1 bach users 263985896 2008-03-28 21:49 bchocwt_lane6.solexa.fastq

![[Note]](images/note.png) | Note |

|---|---|

I completely made up the file names above. You can name them anyway you

want. And you can have them live anywhere on the hard-disk, you do not

need to put them in this data directory. It's just

the way I do it ... and it's where the example manifest files a bit

further down in this chapter will look for the data files.

|

We're almost finished with the setup. As I like to have things neatly separated, I always create a directory called assemblies which will hold my assemblies (or different trials) together. Let's quickly do that:

arcadia:/path/to/myProject$mkdir assembliesarcadia:/path/to/myProject$mkdir assemblies/1sttrialarcadia:/path/to/myProject$cd assemblies/1sttrial

A manifest file is a configuration file for MIRA which tells it what type of assembly it should do and which data it should load. In this case we'll make a simple assembly of a genome with unpaired Illumina data

# Example for a manifest describing a genome de-novo assembly with # unpaired Illumina data # First part: defining some basic things # In this example, we just give a name to the assembly # and tell MIRA it should map a genome in accurate modeproject =# The second part defines the sequencing data MIRA should load and assemble # The data is logically divided into "readgroups" # here comes the unpaired Illumina dataMyFirstAssemblyjob =genome,denovo,accuratereadgroup =SomeUnpairedIlluminaReadsIGotFromTheLabdata =../../data/bchocwt_lane6.solexa.fastqtechnology =solexa

![[Note]](images/note.png) | Note |

|---|---|

Please look up the parameters of the manifest file in the main manual or the example manifest files in the following section. The ones above basically say: make an accurate denovo assembly of unpaired Illumina reads. |

Starting the assembly is now just a matter of a simple command line:

arcadia:/path/to/myProject/assemblies/1sttrial$miramanifest.conf >&log_assembly.txt

For this example - if you followed the walk-through on how to prepare the data - everything you might want to adapt in the first time are the following thing in the manifest file: options:

project= (for naming your assembly project)

Of course, you are free to change any option via the extended parameters, but this is the topic of another part of this manual.

This section will introduce you to manifest files for different use cases. It should cover the most important uses, but as always you are free to mix and match the parameters and readgroup definitions to suit your specific needs.

Taking into account that there may be a lot of combinations of sequencing technologies, sequencing libraries (shotgun, paired-end, mate-pair, etc.) and input file types (FASTQ, FASTA, GenBank, GFF3, etc.pp), the example manifest files just use Illumina and 454 as technologies, GFF3 as input file type for the reference sequence, FASTQ as input type for sequencing data ... and they do not show the multitude of more advanced features like, e.g., using ancillary clipping information in XML files, ancillary masking information in SSAHA2 or SMALT files etc.pp.

I'm sure you will be able to find your way by scanning through the corresponding section on manifest files in the reference chapter :-)

Well, we've seen that already in the section above, but here it is again ... but this time with 454 data.

# Example for a manifest describing a denovo assembly with # unpaired 454 data # First part: defining some basic things # In this example, we just give a name to the assembly # and tell MIRA it should map a genome in accurate modeproject =# The second part defines the sequencing data MIRA should load and assemble # The data is logically divided into "readgroups" # here's the 454 dataMyFirstAssemblyjob =genome,denovo,accuratereadgroup =SomeUnpaired454ReadsIGotFromTheLabdata =../../data/some454data.fastqtechnology =454

Hybrid de-novo assemblies follow the general manifest scheme: tell what you want in the first part, then simply add as separate readgroup the information MIRA needs to know to find the data and off you go. Just for laughs, here's a manifest for 454 shotgun with Illumina shotgun

# Example for a manifest describing a denovo assembly with # shotgun 454 and shotgun Illumina data # First part: defining some basic things # In this example, we just give a name to the assembly # and tell MIRA it should map a genome in accurate modeproject =# The second part defines the sequencing data MIRA should load and assemble # The data is logically divided into "readgroups" # now the shotgun 454 dataMyFirstAssemblyjob =genome,denovo,accuratereadgroup =# now the shotgun Illumina dataDataForShotgun454data =../../data/project454data.fastqtechnology =454readgroup =DataForShotgunIlluminadata =../../data/someillumina.fastqtechnology =solexa

When using paired-end data, you should know

the orientation of the reads toward each other. This is specific to sequencing technologies and / or the sequencing library preparation.

at which distance these reads should be. This is specific to the sequencing library preparation and the sequencing lab should tell you this.

In case you do not know one (or any) of the above, don't panic! MIRA is able to estimate the needed values during the assembly if you tell it to.

The following manifest shows you the most laziest way to define a paired data set by simply adding autopairing as keyword to a readgroup (using Illumina just as example):

# Example for a lazy manifest describing a denovo assembly with # one library of paired reads # First part: defining some basic things # In this example, we just give a name to the assembly # and tell MIRA it should map a genome in accurate modeproject =# The second part defines the sequencing data MIRA should load and assemble # The data is logically divided into "readgroups" # now the Illumina paired-end dataMyFirstAssemblyjob =genome,denovo,accuratereadgroup =DataIlluminaPairedLibautopairingdata =../../data/project_1.fastq ../../data/project_2.fastqtechnology =solexa

If you know the orientation of the reads and/or the library size, you can tell this MIRA the following way (just showing the readgroup definition here):

readgroup = DataIlluminaPairedEnd500Lib

data = ../../data/project_1.fastq ../../data/project_2.fastq

technology = solexa

template_size = 250 750

segment_placement = ---> <---In cases you are not 100% sure about, e.g., the size of the DNA template, you can also give a (generous) expected range and then tell MIRA to automatically refine this range during the assembly based on real, observed distances of read pairs. Do this with autorefine modifier like this:

template_size = 50 1000 autorefineThe following manifest file is an example for assembling with several different libraries from different technologies. Do not forget you can use autopairing or autorefine :-)

# Example for a manifest describing a denovo assembly with # several kinds of sequencing libraries # First part: defining some basic things # In this example, we just give a name to the assembly # and tell MIRA it should map a genome in accurate modeproject =# The second part defines the sequencing data MIRA should load and assemble # The data is logically divided into "readgroups" # now the Illumina paired-end dataMyFirstAssemblyjob =genome,denovo,accuratereadgroup =# now the Illumina mate-pair dataDataIlluminaForPairedEnd500bpLibdata =../../data/project500bp-1.fastq ../../data/project500bp-2.fastqtechnology =solexastrain =bchoc_se1template_size =250 750segment_placement =---> <---readgroup =# some Sanger data (6kb library)DataIlluminaForMatePair3kbLibdata =../../data/project3kb-1.fastq ../../data/project3kb-2.fastqtechnology =solexastrain =bchoc_se1template_size =2500 3500segment_placement =<--- --->readgroup =# some 454 dataDataForSanger6kbLibdata =../../data/sangerdata.fastqtechnology =sangertemplate_size =5500 6500segment_placement =---> <---readgroup =# some Ion Torrent dataDataFo454Pairsdata =../../data/454data.fastqtechnology =454template_size =8000 1200segment_placement =2---> 1--->readgroup =DataFoIonPairsdata =../../data/iondata.fastqtechnology =iontortemplate_size =1000 300segment_placement =2---> 1--->

MIRA will make use of ancillary information present in the manifest file. One of these is the information to which strain (or organism or cell line etc.pp) the generated data belongs.

You just need to tell in the manifest file which data comes from which strain. Let's assume that in the example from above, the "lane6" data were from a first mutant named bchoc_se1 and the "lane7" data were from a second mutant named bchoc_se2. Here's the manifest file you would write then:

# Example for a manifest describing a de-novo assembly with # unpaired Illumina data, but from multiple strains # First part: defining some basic things # In this example, we just give a name to the assembly # and tell MIRA it should map a genome in accurate modeproject =# The second part defines the sequencing data MIRA should load and assemble # The data is logically divided into "readgroups" # now the Illumina dataMyFirstAssemblyjob =genome,denovo,accuratereadgroup =DataForSE1data =../../data/bchocse_lane6.solexa.fastqtechnology =solexastrain =bchoc_se1readgroup =DataForSE2data =../../data/bchocse_lane7.solexa.fastqtechnology =solexastrain =bchoc_se2

![[Note]](images/note.png) | Note |

|---|---|

| While assembling de-novo with multiple strains is possible, the interpretation of results may become a bit daunting in some cases. For many scenarios it might therefore be preferable to successively use the data sets in own assemblies or mappings. |

This strain information for each readgroup is really the only change you need to perform to tell MIRA everything it needs for handling strains.

Table of Contents

“You have to know what you're looking for before you can find it.” | ||

| --Solomon Short | ||

This guide assumes that you have basic working knowledge of Unix systems, know the basic principles of sequencing (and sequence assembly) and what assemblers do.

While there are step by step instructions on how to setup your data and then perform an assembly, this guide expects you to read at some point in time

Before the mapping, Chapter 3: “Preparing data” to know what to do (or not to do) with the sequencing data before giving it to MIRA.

Generally, the Chapter 7: “Working with the results of MIRA” to know what to do with the results of the assembly. More specifically, Section 7.3: “ Converting results ” Section 7.6: “ Places of interest in a mapping assembly ” Section 7.7: “ Post-processing mapping assemblies ”

And also Chapter 9: “MIRA 5 reference manual” to look up how manifest files should be written (Section 9.3.2: “ The manifest file: basics ” and Section 9.3.3: “ The manifest file: information on the data you have ” and Section 9.3.4: “ The manifest file: extended parameters ”), some command line options as well as general information on what tags MIRA uses in assemblies, files it generates etc.pp

Last but not least, you may be interested in some observations about the different sequencing technologies and the traps they may contain, see Chapter 11: “Description of sequencing technologies” for that. For advice on what to pay attention to before going into a sequencing project, have a look at Chapter 12: “Some advice when going into a sequencing project”.

This part will introduce you step by step how to get your data together for a simple mapping assembly.

I'll make up an example using an imaginary bacterium: Bacillus chocorafoliensis (or short: Bchoc).

In this example, we assume you have two strains: a wild type strain of Bchoc_wt and a mutant which you perhaps got from mutagenesis or other means. Let's imagine that this mutant needs more time to eliminate a given amount of chocolate, so we call the mutant Bchoc_se ... SE for slow eater

You wanted to know which mutations might be responsible for the observed behaviour. Assume the genome of Bchoc_wt is available to you as it was published (or you previously sequenced it), so you resequenced Bchoc_se with Solexa to examine mutations.

You need to create (or get from your sequencing provider) the sequencing data in either FASTQ or FASTA + FASTA quality format. The following walkthrough uses what most people nowadays get: FASTQ.

Create a new project directory (e.g. myProject) and a subdirectory of this which will hold the sequencing data (e.g. data).

arcadia:/path/tomkdir myProjectarcadia:/path/tocd myProjectarcadia:/path/to/myProject$mkdir data

Put the FASTQ data into that data directory so that it now looks perhaps like this:

arcadia:/path/to/myProject$ls -l data-rw-r--r-- 1 bach users 263985896 2008-03-28 21:49 bchocse_lane6.solexa.fastq -rw-r--r-- 1 bach users 264823645 2008-03-28 21:51 bchocse_lane7.solexa.fastq

![[Note]](images/note.png) | Note |

|---|---|

I completely made up the file names above. You can name them anyway you

want. And you can have them live anywhere on the hard disk, you do not

need to put them in this data directory. It's just

the way I do it ... and it's where the example manifest files a bit further down

in this chapter will look for the data files.

|

The reference sequence (the backbone) can be in a number of different formats: GFF3, GenBank (.gb, .gbk, .gbf, .gbff), MAF, CAF, FASTA. The first three have the advantage of being able to carry additional information like, e.g., annotation. In this example, we will use a GFF3 file like the ones one can download from the NCBI.

![[Note]](images/note.png) | Note |

|---|---|

| TODO: Write why GFF3 is better and where to get them at the NCBI. |

So, let's assume that our wild type

strain is in the following file:

NC_someNCBInumber.gff3.

You do not need to copy the reference sequence to your directory, but I normally copy also the reference file into the directory with my data as I want to have, at the end of my work, a nice little self-sufficient directory which I can archive away and still be sure that in 10 years time I have all data I need together.

arcadia:/path/to/myProject$cp /somewhere/NC_someNCBInumber.gff3 dataarcadia:/path/to/myProject$ls -l data-rw-r--r-- 1 bach users 6543511 2008-04-08 23:53 NC_someNCBInumber.gff3 -rw-r--r-- 1 bach users 263985896 2008-03-28 21:49 bchocse_lane6.solexa.fastq -rw-r--r-- 1 bach users 264823645 2008-03-28 21:51 bchocse_lane7.solexa.fastq

We're almost finished with the setup. As I like to have things neatly separated, I always create a directory called assemblies which will hold my assemblies (or different trials) together. Let's quickly do that:

arcadia:/path/to/myProject$mkdir assembliesarcadia:/path/to/myProject$mkdir assemblies/1sttrialarcadia:/path/to/myProject$cd assemblies/1sttrial

A manifest file is a configuration file for MIRA which tells it what type of assembly it should do and which data it should load. In this case we have unpaired sequencing data which we want to map to a reference sequence, the manifest file for that is pretty simple:

# Example for a manifest describing a mapping assembly with # unpaired Illumina data # First part: defining some basic things # In this example, we just give a name to the assembly # and tell MIRA it should map a genome in accurate modeproject =# The second part defines the sequencing data MIRA should load and assemble # The data is logically divided into "readgroups" # first, the reference sequenceMyFirstMappingjob =genome,mapping,accuratereadgroup is_reference data =# now the Illumina data../../data/NC_someNCBInumber.gff3strain =bchoc_wtreadgroup =SomeUnpairedIlluminaReadsIGotFromTheLabdata =../../data/*fastqtechnology =solexastrain =bchoc_se

![[Note]](images/note.png) | Note |

|---|---|

Please look up the parameters of the manifest file in the main manual or the example manifest files in the following section. The ones above basically say: make an accurate mapping of Solexa reads against a genome; in one pass; the name of the backbone strain is 'bchoc_wt'; the data with the backbone sequence (and maybe annotations) is in a specified GFF3 file; for Solexa data: assign default strain names for reads which have not loaded ancillary data with strain info and that default strain name should be 'bchoc_se'. |

Starting the assembly is now just a matter of a simple command line:

arcadia:/path/to/myProject/assemblies/1sttrial$miramanifest.conf >&log_assembly.txt

For this example - if you followed the walk-through on how to prepare the data - everything you might want to adapt in the first time are the following thing in the manifest file: options:

project= (for naming your assembly project)

strain_name= to give the names of your reference and mapping strain

Of course, you are free to change any option via the extended parameters, but this is the topic of another part of this manual.

This section will introduce you to manifest files for different use cases. It should cover the most important uses, but as always you are free to mix and match the parameters and readgroup definitions to suit your specific needs.